Back in 2009 I wrote this post about How to Calculate the Running Totals in SQL Server. Back then (before SQL Server 2012) this was one of the “difficult” queries to write. We used to do it with a correlated subquery, and sometimes even a cursor was used to calculate running totals.

Then Microsoft came up with a much-awaited enhancement to the OVER() clause in SQL Server 2012. OVER() is now fully extended to aggregate functions like SUM, COUNT and AVG. In simple words, we can now write ORDER BY inside the OVER() clause for aggregate functions as well. This feature has made running total calculations extremely easy and efficient.
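As a rough illustration of the difference, the sketch below computes the same running total both ways over a tiny inline row set. The SampleRows name, its Id column and the three values are made up for this example; they are not part of the sample data used later in this post.

```sql
-- Hypothetical three-row sample, defined inline so the snippet runs on its own
WITH SampleRows (Id, Quantity) AS
(
    SELECT v.Id, v.Quantity
    FROM (VALUES (1, 10), (2, 15), (3, 20)) AS v (Id, Quantity)
)
SELECT  s.Id,
        s.Quantity,
        -- Pre-2012 style: a correlated subquery re-sums all earlier rows for every row
        (SELECT SUM(p.Quantity)
         FROM   SampleRows AS p
         WHERE  p.Id <= s.Id)                  AS RunningTotal_Subquery,
        -- SQL Server 2012 and later: ORDER BY inside OVER() gives the same totals
        SUM(s.Quantity) OVER (ORDER BY s.Id)   AS RunningTotal_Over,
        COUNT(*)        OVER (ORDER BY s.Id)   AS RunningCount,
        -- AVG over an INT column returns an INT, so the result is truncated
        AVG(s.Quantity) OVER (ORDER BY s.Id)   AS RunningAvg
FROM    SampleRows AS s
ORDER BY s.Id;
```

Both running-total columns should come out as 10, 25, 45 for these rows.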

Along the same lines as my previous post, the scenarios I’ll be covering in this post are:

- Running Total for all records.

- Running Total on each Group of Records.

- Running Total on each Date.

Create Sample Data:

--------------------------------------------------------------------------------------------------------

-- Create Table
CREATE TABLE #Sales
(
    ShipId   INT,
    OrderId  INT,
    ShipDate DATETIME,
    Quantity INT
)
GO

-- Load Sample Data
INSERT INTO #Sales (ShipId, OrderId, ShipDate, Quantity)
VALUES
(1, 1, '2015-10-01 12:06:16:820PM', 10),
(2, 1, '2015-10-02 04:16:16:820PM', 15),
(3, 2, '2015-10-01 01:26:16:820PM', 20),
(4, 2, '2015-10-03 02:40:16:820PM', 12),
(5, 2, '2015-10-04 01:05:16:820PM', 15),
(6, 3, '2015-10-03 05:25:16:820PM', 25),
(7, 3, '2015-10-04 03:50:16:820PM', 50),
(8, 3, '2015-10-05 07:40:16:820PM', 30),
(9, 4, '2015-10-06 03:30:16:820PM', 20),
(10, 4, '2015-10-07 04:20:16:820PM', 10),
(11, 5, '2015-10-05 05:05:16:820PM', 35)

--------------------------------------------------------------------------------------------------------

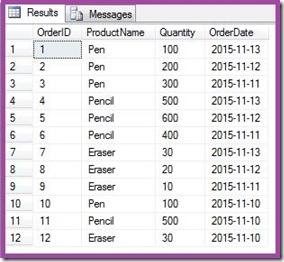

This is what our sample data looks like:

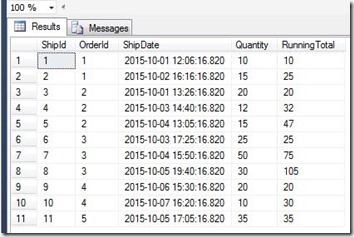

Case I: Running Total for all records.

This is the simplest case: calculating the running total across all the records in the order of ShipDate. If you remember the old ways, we needed a rather awkward correlated subquery to achieve that. Now it takes nothing more than SUM(Quantity) OVER(ORDER BY ShipDate).

Note: I’m assuming you know the basics of the OVER() clause.

--------------------------------------------------------------------------------------------------------

SELECT *
      ,SUM(Quantity) OVER(ORDER BY ShipDate) AS RunningTotal
FROM #Sales
ORDER BY ShipDate

--------------------------------------------------------------------------------------------------------

Here is the result returned by the above query:

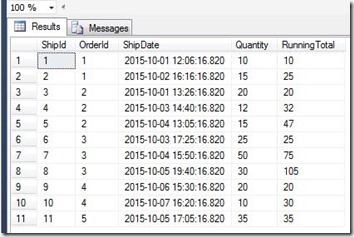
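One detail worth knowing: when OVER() has an ORDER BY but no ROWS or RANGE clause, SQL Server uses RANGE BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW as the default frame. Rows that share the same ORDER BY value are then treated as peers and all receive the same running total. The sample data in this post has no duplicate ShipDate values, so it makes no difference here. The sketch below uses a few hypothetical inline rows with a deliberate tie, and assumes Id is unique so it can serve as a tiebreaker.

```sql
-- Hypothetical rows: Ids 2 and 3 share the same ShipDate to force a tie
SELECT  v.Id,
        v.ShipDate,
        v.Quantity,
        -- Default frame (RANGE): tied rows are peers and receive the same total
        SUM(v.Quantity) OVER (ORDER BY v.ShipDate) AS RunningTotal_Default,
        -- Explicit ROWS frame plus a unique tiebreaker: the total advances one row at a time
        SUM(v.Quantity) OVER (ORDER BY v.ShipDate, v.Id
                              ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW) AS RunningTotal_Rows
FROM (VALUES (1, CAST('20151001' AS DATE), 10),
             (2, CAST('20151002' AS DATE), 15),
             (3, CAST('20151002' AS DATE), 20)) AS v (Id, ShipDate, Quantity)
ORDER BY v.ShipDate, v.Id;
```

With these rows the default frame should return 10, 45, 45, while the ROWS version returns 10, 25, 45. The explicit ROWS frame is also commonly reported to perform better than the default RANGE frame on large inputs.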

Case II: Running Total on each Group of Records.

Now, instead of one running total across all records, we will calculate a separate running total for each OrderId. To do that we just need to add PARTITION BY OrderId to the OVER() clause.

--------------------------------------------------------------------------------------------------------

SELECT *
      ,SUM(Quantity) OVER(PARTITION BY OrderId ORDER BY ShipDate) AS RunningTotal
FROM #Sales
ORDER BY OrderId, ShipDate

--------------------------------------------------------------------------------------------------------

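Output:

| ShipId | OrderId | ShipDate | Quantity | RunningTotal |
|---|---|---|---|---|
| 1 | 1 | 2015-10-01 12:06:16.820 | 10 | 10 |
| 2 | 1 | 2015-10-02 16:16:16.820 | 15 | 25 |
| 3 | 2 | 2015-10-01 13:26:16.820 | 20 | 20 |
| 4 | 2 | 2015-10-03 14:40:16.820 | 12 | 32 |
| 5 | 2 | 2015-10-04 13:05:16.820 | 15 | 47 |
| 6 | 3 | 2015-10-03 17:25:16.820 | 25 | 25 |
| 7 | 3 | 2015-10-04 15:50:16.820 | 50 | 75 |
| 8 | 3 | 2015-10-05 19:40:16.820 | 30 | 105 |
| 9 | 4 | 2015-10-06 15:30:16.820 | 20 | 20 |
| 10 | 4 | 2015-10-07 16:20:16.820 | 10 | 30 |
| 11 | 5 | 2015-10-05 17:05:16.820 | 35 | 35 |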
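To see exactly what PARTITION BY changes, it can help to put the overall and the per-group running totals side by side in one query. This is only a sketch over four hypothetical inline rows (two orders with two shipments each), ordered by ShipId instead of ShipDate to keep it short.

```sql
-- Hypothetical rows: two orders with two shipments each
SELECT  v.ShipId,
        v.OrderId,
        v.Quantity,
        -- No PARTITION BY: one running total across every row
        SUM(v.Quantity) OVER (ORDER BY v.ShipId)                        AS RunningTotal_All,
        -- PARTITION BY OrderId: the running total restarts for each order
        SUM(v.Quantity) OVER (PARTITION BY v.OrderId ORDER BY v.ShipId) AS RunningTotal_PerOrder
FROM (VALUES (1, 1, 10),
             (2, 1, 15),
             (3, 2, 20),
             (4, 2, 12)) AS v (ShipId, OrderId, Quantity)
ORDER BY v.OrderId, v.ShipId;
```

For these rows RunningTotal_All should be 10, 25, 45, 57, while RunningTotal_PerOrder should be 10, 25, 20, 32, restarting when OrderId changes from 1 to 2.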

Case III: Running Total on each Date.

Now we will see how to calculate the running total for each day. For that we need ShipDate in the PARTITION BY, but with the time portion removed; otherwise each distinct date-and-time value would form its own partition. We can do that by simply converting ShipDate to the DATE data type. See the query below:

--------------------------------------------------------------------------------------------------------

SELECT *
      ,SUM(Quantity) OVER(PARTITION BY CONVERT(DATE, ShipDate) ORDER BY ShipDate) AS RunningTotal
FROM #Sales
ORDER BY ShipDate

--------------------------------------------------------------------------------------------------------

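Output:

| ShipId | OrderId | ShipDate | Quantity | RunningTotal |
|---|---|---|---|---|
| 1 | 1 | 2015-10-01 12:06:16.820 | 10 | 10 |
| 3 | 2 | 2015-10-01 13:26:16.820 | 20 | 30 |
| 2 | 1 | 2015-10-02 16:16:16.820 | 15 | 15 |
| 4 | 2 | 2015-10-03 14:40:16.820 | 12 | 12 |
| 6 | 3 | 2015-10-03 17:25:16.820 | 25 | 37 |
| 5 | 2 | 2015-10-04 13:05:16.820 | 15 | 15 |
| 7 | 3 | 2015-10-04 15:50:16.820 | 50 | 65 |
| 11 | 5 | 2015-10-05 17:05:16.820 | 35 | 35 |
| 8 | 3 | 2015-10-05 19:40:16.820 | 30 | 65 |
| 9 | 4 | 2015-10-06 15:30:16.820 | 20 | 20 |
| 10 | 4 | 2015-10-07 16:20:16.820 | 10 | 10 |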
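When reading a per-day result it can be handy to return the derived date as its own column, so the partition boundaries are visible. A minimal sketch, assuming three hypothetical inline rows and a ShipDay alias that is not part of the original query; CAST(... AS DATE) is used here and is equivalent to CONVERT(DATE, ...).

```sql
-- Hypothetical rows: two shipments on 1 October, one on 2 October
SELECT  v.ShipId,
        v.ShipDate,
        CAST(v.ShipDate AS DATE) AS ShipDay,   -- time portion removed
        v.Quantity,
        -- The running total restarts whenever the calendar day changes
        SUM(v.Quantity) OVER (PARTITION BY CAST(v.ShipDate AS DATE)
                              ORDER BY v.ShipDate) AS RunningTotal
FROM (VALUES (1, CAST('2015-10-01T12:06:16' AS DATETIME), 10),
             (3, CAST('2015-10-01T13:26:16' AS DATETIME), 20),
             (2, CAST('2015-10-02T16:16:16' AS DATETIME), 15)) AS v (ShipId, ShipDate, Quantity)
ORDER BY v.ShipDate;
```

For these rows RunningTotal should be 10, 30, 15: the two shipments on 1 October accumulate, and the total starts again on 2 October.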

I hope you like this. Do give me your feedback.

Thanks

Mangal Pardeshi