Today I’ll show you some different methods to run a DML operation like UPDATE in small batches for a large table. Though I’m going to show only UPDATE statements, methods are applicable to DELETE and INSERTS as well. When you want to run an UPDATE query(or other DML operations) over a very huge table having over a billion rows or even 100s of Million it is recommended that you should not update them at one go. DML queries on a very large number of rows can cause performance issues, transaction log might get full, affects concurrent users, eats up lot of server resources, runs for hours and many other. Good practice is that you should break the number of records in small batches of few thousand and update them. This way you will use minimum server resources and most importantly you will prevent the transaction log file from getting full.

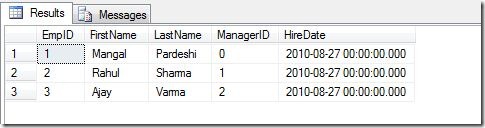

First lets create one table and insert some sample data.

--------------------------------------------------------------------------------------------------------

-- Create the table

CREATE TABLE #A

(

ID INT,

CREATED_DATE DATE,

METHOD VARCHAR(10)

)-- Insert 10000 rows in the table

;WITH MANGAL AS

(

SELECT 1 AS ID, CONVERT(DATE,'20000101') AS CREATED_DATE

UNION ALL

SELECT ID+1, DATEADD(DD, 1, CREATED_DATE)

FROM MANGAL

WHERE ID <= 9999)

INSERT INTO #A(ID, CREATED_DATE)

SELECT ID, CREATED_DATE

FROM MANGALOPTION (MAXRECURSION 10000);

-- Check sample data

SELECT *

FROM #A--------------------------------------------------------------------------------------------------------

This is not the most efficient way to generate some random data using a recursive CTE but I like it so I’m using it.

I. Using a TOP and GO:

This is the simplest method to run a query in a small batches. I frequently use this method in development when I want to update some records quickly without thinking much. Note the GO 10 in the query. An integer after GO will execute the preceding query specified number of times. In this case update statement will execute 10 times(I know there are 10000 rows in the table and I’m using TOP 1000, simple math).

------------------------------------------------------------------------------------------------------

UPDATE TOP (1000) #A

SET METHOD = 'I'

WHERE METHOD IS NULL

GO 10-- Verify result

SELECT *

FROM #A------------------------------------------------------------------------------------------------------

All the queries are quite simple and they are self explanatory so I’m not going to explain them in detail.

II. Using ROWCOUNT and GO:

Same query but without the TOP operator. Here I’m using a ROWCOUNT function to limit the number of rows in the batch. ROWCOUNT causes SQL Server to stop processing the query after the specified number of rows are returned.

------------------------------------------------------------------------------------------------------

SET ROWCOUNT 1000

UPDATE #A

SET METHOD = 'II'

WHERE METHOD <> 'II'

GO 10SET ROWCOUNT 0

-- Verify result

SELECT *

FROM #A------------------------------------------------------------------------------------------------------ IMPORTANT: Using SET ROWCOUNT will not affect DELETE, INSERT, and UPDATE statements in a future release of SQL Server. Avoid using SET ROWCOUNT with DELETE, INSERT, and UPDATE statements in new development work, and plan to modify applications that currently use it. For a similar behavior, use the TOP syntax.

III. Using TOP and GOTO:

Now instead of GO I’ll use GOTO to run the batch multiple times. The GOTO statement causes the execution of the T-SQL batch to stop processing the following commands to GOTO and processing continues from the label where GOTO points. Here I’ll use GOTO to keep processing the particular label until it’s @@ROWCOUNT becomes zero.

------------------------------------------------------------------------------------------------------ UPDATE_BATCH:

UPDATE TOP (3000) #A

SET METHOD = 'III'

WHERE METHOD <> 'III'

IF @@ROWCOUNT > 0 GOTO UPDATE_BATCH-- Verify result

SELECT *

FROM #A

------------------------------------------------------------------------------------------------------

IV. Using ROWCOUNT and GOTO:

Same GOTO concept with ROWCOUNT.

------------------------------------------------------------------------------------------------------

SET ROWCOUNT 3500

UPDATE_BATCH:UPDATE #A

SET METHOD = 'IV'

WHERE METHOD <> 'IV'

IF @@ROWCOUNT > 0 GOTO UPDATE_BATCHSET ROWCOUNT 0

-- verify result

SELECT *

FROM #A

------------------------------------------------------------------------------------------------------

V. Using WHILE and BREAK:

Here I’m taking the help of an infinite WHILE loop ( WHILE 1=1 ) to run the batch multiple times and using the BREAK to exit the loop when @@ROWCOUNT becomes zero.

------------------------------------------------------------------------------------------------------

WHILE (1=1)

BEGINBEGIN TRANSACTION

UPDATE TOP(3333) #A

SET METHOD = 'V'

WHERE METHOD <> 'V'

IF @@ROWCOUNT = 0

BEGIN

COMMIT TRANSACTION

BREAK

END

COMMIT TRANSACTION

END-- Verify result

SELECT *

FROM #A

------------------------------------------------------------------------------------------------------

Same can be implemented using ROWCOUNT as I already shown.

VI. Using a Sequence column and WHILE:

Now an entirely different method. Not using GO, GOTO or BREAK. This method is more systematic where you have more control on how query is going to execute. Note that when you use TOP or ROWCOUNT you actually have no control on which rows are going to get updated. Sorting is completely dependent on the query plan created by the query engine.

------------------------------------------------------------------------------------------------------

DECLARE @C INT

DECLARE @I INT

DECLARE @L INT

DECLARE @MAX INTSET @C = 1

SET @I = 2200

SET @L = @C + @I

SET @MAX = (SELECT MAX(ID) FROM #A)SELECT @C AS C, @I AS I, @L AS L, @MAX AS MAX

WHILE @C < @MAX

BEGIN

UPDATE #A

SET METHOD = 'VI'

WHERE ID >= @C

AND ID < @LSET @C = @L -- OR SET @C = @C + @I

SET @L = @L + @IEND

-- Verify result

SELECT *

FROM #A

------------------------------------------------------------------------------------------------------

VII. Using a date range and WHILE:

On similar lines if we don’t have a reliable sequence column or we want to use a date column to update the records by month or year.

------------------------------------------------------------------------------------------------------

DECLARE @C DATE

DECLARE @I DATE

DECLARE @MAX DATESELECT @C = MIN(CREATED_DATE),

@MAX = MAX(CREATED_DATE)

FROM #ASET @I = DATEADD(YEAR, DATEDIFF(YEAR, 0, @C)+1, 0)

--SELECT @C AS C, @I AS I , @MAX AS MAX

WHILE @C <= @MAX

BEGIN

UPDATE #A

SET METHOD = 'VII'

WHERE CREATED_DATE >= @C

AND CREATED_DATE < @ISET @C = @I

SET @I = DATEADD(YEAR, 1, @I)--SELECT @C, @I

END-- Verify result

SELECT *

FROM #A------------------------------------------------------------------------------------------------------

VIII. Using WHILE without GO, GOTO or BREAK:

One last method from me without using GO, GOTO and BREAK but relatively simpler compare to last 2 methods. Because different people will advise you not to use GO, GOTO and BREAK in SQL.

------------------------------------------------------------------------------------------------------

DECLARE @ROWCOUNT INT = 1

DECLARE @C INT = 2800WHILE (@ROWCOUNT > 0)

BEGIN

UPDATE TOP (@C) #A

SET METHOD = 'VIII'

WHERE METHOD <> 'VIII'SET @ROWCOUNT = @@ROWCOUNT

END-- Verify result

SELECT *

FROM #A

------------------------------------------------------------------------------------------------------

Same can be done using ROWCOUNT.

IX. Final

Well this is the query I found some 4-5 years back when I was searching for same thing on Google. I don’t remember where I saw it or who wrote it.

------------------------------------------------------------------------------------------------------

DECLARE @C INTSET ROWCOUNT 1000

WHILE (1=1)BEGIN

BEGIN TRANSACTION

UPDATE #A

SET METHOD = 'X'

WHERE METHOD <> 'X'SET @C = @@ROWCOUNT

IF @@Error <> 0

BEGIN

Print 'Problem Updating the records'

ROLLBACK TRANSACTION

BREAK

ENDIF @C = 0

BEGIN

COMMIT TRANSACTION

BREAK

END

COMMIT TRANSACTION

END

SET ROWCOUNT 1000

-- Verify result

SELECT *

FROM #A------------------------------------------------------------------------------------------------------

Some notes: Both TOP and ROWCOUNT can be parameterized. We can pass a variable instead of direct number. But of course since ROWCOUNT is soon going to be deprecated from future SQL Server versions one should avoid using it. I was being lazy not to use transactions (commits and rollback ) wherever possible in above examples but one should use them to add more control, clarity to code and also for better understanding.

I have not really tested that which query is better, I leave that up to you. Idea was to share different methods to execute a query in small batches. But I would go for method 6 or 7 on production environment. Do let me know your comments, suggestions and what do think of all these methods.

Namaste

Mangal